Bringing Survey Methodology to Machine Learning: Effects of Data Collection Methods on Model Performance

Abstract

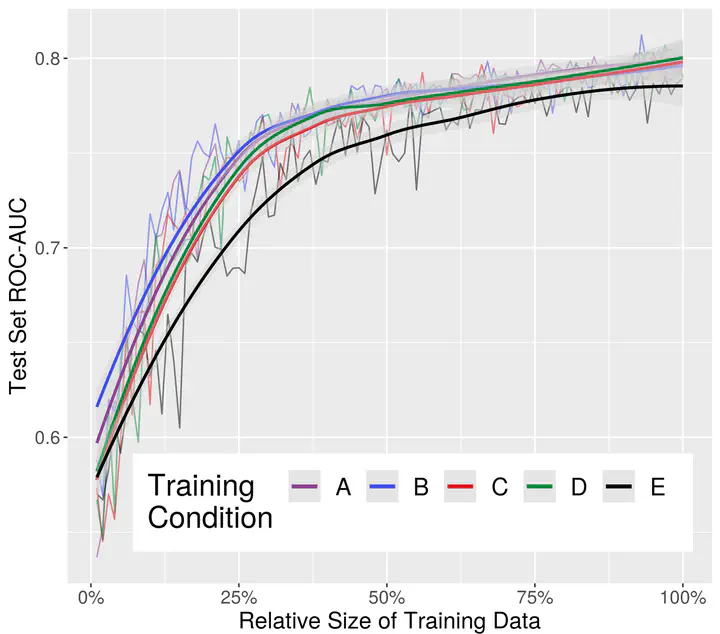

The instruments used to collect training data for machine learning (ML) models have many similarities to web surveys, such as the provision of a stimulus and fixed response options. Survey methodologists know that the wording and ordering of items and response options, as well as annotator effects, affect survey data. Our previous research showed that these effects also occur when collecting annotations for model training. Our new study builds on those results, exploring how instrument structure and annotator composition affect models trained on the resulting annotations. Using previously annotated Twitter data on hate speech, we collect annotations with five versions of an annotation instrument, randomly assigning annotators to versions. We then train ML models on each of the five resulting datasets. By comparing model performance across the instruments, we aim to understand 1) whether the way annotations are collected affects the predictions and errors of the trained models, and 2) which instrument version leads to the most efficient model. In addition, we expand upon our earlier findings that annotators’ demographic characteristics affect the annotations they make. Our results emphasize the importance of careful annotation instrument design.